Karl asks:

I always hear that photos of cosmological objects (like that photo of the Ring Nebula in your previous post) have been enhanced in some way. What would those things look like if we just saw them exactly as the telescope or camera picked them up? And when the photos are enhanced, are they always enhanced the same way, or does the formula vary?

Finally, does the enhancing serve some scientific purpose, or is it done basically to make the pictures prettier? (I suppose that's a scientific purpose too, in the long view, since pretty pictures make it easier to get funding. Don't worry, I won't tell!)

So, it's totally true: the final images given out for press releases have usually been heavily processed from the original raw images. Just like the party pictures you throw into Photoshop to remove your friends' red-eye, astronomical images go through a set of "standard" processing steps.

That said, there's usually no outright deception of the kind advertisers pull when they airbrush images: no astronomer is going to try to make their planet look skinnier or add lolcat tags. To understand this a little better, let's talk about how modern astronomical images are actually taken.

First off, almost all optical images are taken with a charge-coupled device (CCD) mounted at the back of a telescope. This is the same kind of chip that's in the back of your ordinary digital camera, albeit more sensitive and more expensive. Essentially, it's just a thin piece of silicon divided into a finely spaced grid of cells. When a photon strikes the silicon, it can excite an electron, which is then held in that cell. At the end of an exposure, each cell reports how many excited electrons it has collected. The image simply maps each cell to a pixel, and the brightness of that pixel is the number of electrons the cell collected.
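To make the counting idea concrete, here is a toy simulation in Python with NumPy. It is a sketch under simple assumptions, not a model of any real chip: photons arrive randomly (Poisson), each one has a fixed, assumed chance `quantum_efficiency` of exciting an electron, and the pixel value is just the electron count. The function name `expose` and the 0.5 efficiency are illustrative choices.

```python
import numpy as np

def expose(expected_photons, quantum_efficiency, rng):
    """Toy CCD exposure: return the number of excited electrons in each cell.

    expected_photons: 2-D array, mean number of photons reaching each cell.
    quantum_efficiency: assumed chance (0 to 1) that a photon excites an electron.
    """
    photons = rng.poisson(expected_photons)                 # photons arrive randomly
    electrons = rng.binomial(photons, quantum_efficiency)   # not every photon is caught
    return electrons                                        # pixel value = electron count

rng = np.random.default_rng(seed=0)
scene = np.full((4, 4), 100.0)   # faint, even background: ~100 photons per cell
scene[1, 2] = 1000.0             # one bright "star"
image = expose(scene, quantum_efficiency=0.5, rng=rng)
print(image)
```

Note that nothing in the output says anything about the color of the photons; each cell only holds a number.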

Now, notice there's absolutely no color information here. The CCD just reports the number of excited electrons and doesn't know whether a red photon or a blue photon excited them...so this just produces a black-and-white photo. This means we have to use filters if we want to get any color information. If we put, say, a red filter on our CCD before taking the image, then we know only red photons can get through.

So, first we take an exposure with a red filter, then another with a green filter, and then another with a blue filter. We combine them all at the end to produce our fancy color image.
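The combining step can be sketched in a few lines of Python with NumPy. This assumes the three exposures are already aligned and calibrated; `combine_rgb` is a hypothetical helper, not part of any particular software package.

```python
import numpy as np

def combine_rgb(red, green, blue):
    """Stack three single-filter exposures into one color image.

    Assumes the three frames are already aligned and calibrated. The result has
    shape (rows, cols, 3) and is scaled so the brightest value is 1.0 for display.
    """
    rgb = np.stack([red, green, blue], axis=-1).astype(float)
    peak = rgb.max()
    return rgb / peak if peak > 0 else rgb

# Two-pixel example: left pixel only bright in red, right pixel only in green.
red = np.array([[10, 0]])
green = np.array([[0, 10]])
blue = np.array([[0, 0]])
color = combine_rgb(red, green, blue)
print(color[0, 0], color[0, 1])   # prints [1. 0. 0.] [0. 1. 0.]
```

Scaling all three channels by one shared maximum preserves the relative brightness between filters; balancing the filters against each other is a separate, more subjective step.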

Okay, you're probably already asking, "Then how does my digital camera take color photos all at once without any color filters?" The answer is that it uses filters all the time: here's a schematic of the filter mosaic (a Bayer filter) used in most digital camera CCDs. By filtering alternating pixels with different colors, the camera gets an image in each filter from a single exposure, albeit at lower resolution than the full grid. The camera software then interpolates these staggered color images to produce a single color image.
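Here is a minimal sketch of that interpolation (often called demosaicing) in Python with NumPy. It assumes the common RGGB arrangement of the Bayer mosaic and uses plain bilinear averaging, the simplest approach; real cameras use more sophisticated methods, and the function names here are hypothetical.

```python
import numpy as np

KERNEL = np.array([[1, 2, 1],
                   [2, 4, 2],
                   [1, 2, 1]], dtype=float)

def conv3x3(img, kernel):
    """3x3 filtering with zero padding (kernel is symmetric, so no flip needed)."""
    padded = np.pad(img, 1)
    rows, cols = img.shape
    out = np.zeros((rows, cols))
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * padded[dy:dy + rows, dx:dx + cols]
    return out

def bayer_masks(shape):
    """Which cells sit under red, green, and blue filters, assuming an RGGB layout."""
    r, c = np.indices(shape)
    red = (r % 2 == 0) & (c % 2 == 0)
    blue = (r % 2 == 1) & (c % 2 == 1)
    green = ~(red | blue)
    return red, green, blue

def demosaic_bilinear(raw):
    """Fill in each color's missing cells by averaging the nearest cells of that color.

    Needs an image of at least 2x2 so every neighbourhood contains all three colors.
    """
    raw = raw.astype(float)
    channels = []
    for mask in bayer_masks(raw.shape):
        known = np.where(mask, raw, 0.0)
        weight = conv3x3(mask.astype(float), KERNEL)
        estimate = conv3x3(known, KERNEL) / weight
        channels.append(np.where(mask, raw, estimate))  # keep measured values as-is
    return np.stack(channels, axis=-1)

# A flat scene (red=10, green=20, blue=30) seen through the mosaic.
red, green, blue = bayer_masks((4, 6))
raw = 10 * red + 20 * green + 30 * blue
print(demosaic_bilinear(raw)[2, 3])   # prints [10. 20. 30.]
```

Each output channel has full resolution, but three-quarters of the red and blue values (and half of the green) are interpolated rather than measured.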

So with all this said, let's take a look at an actual single raw image of a galaxy:

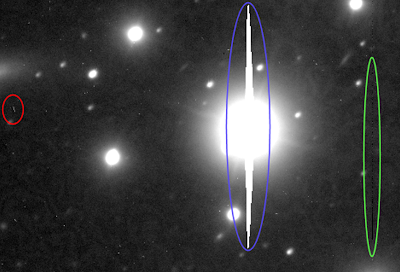

You'll want to click on the image above to see the original with all its glorious artifacts. Let's also take a look at a close-up with some artifacts highlighted:

So, there are several issues we have to contend with to make this into a "pretty picture".

In red, I've highlighted a particularly annoying cosmic ray trail (though they're all over the image). Unlike a digital camera snapshot, where the shutter is open for only a fraction of a second, astronomical exposures (particularly of faint objects) can last upwards of an hour. During that time, high-energy particles known as cosmic rays, which are always whizzing around, have a much greater chance of hitting your CCD and exciting electrons completely independently of any photons coming through the telescope. The annoying ones come in at an oblique angle to the CCD, leaving a trail of excited electrons across the chip. The even more annoying ones do this directly over the CCD cells you're using to capture an image of your object. Thankfully, there are some pretty good cosmic ray removal packages out there that use sophisticated image detection algorithms to remove them...so that's one processing step right there.
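The dedicated packages are far more careful than this, but the core idea can be sketched in Python with NumPy: flag pixels that are much brighter than the median of their eight neighbours and replace them with that median. This is a simplified stand-in, not the algorithm any particular package uses. It cannot tell a cosmic ray from a faint, compact star; real tools exploit the fact that a cosmic-ray hit is much sharper than a star, which the telescope optics always blur out. The threshold of 5 is an arbitrary assumption.

```python
import numpy as np

def neighbor_median(img):
    """Median of the 8 pixels surrounding each pixel (edges padded by repetition)."""
    p = np.pad(img, 1, mode="edge")
    rows, cols = img.shape
    neighbors = [p[dy:dy + rows, dx:dx + cols]
                 for dy in range(3) for dx in range(3) if (dy, dx) != (1, 1)]
    return np.median(np.stack(neighbors), axis=0)

def remove_cosmic_rays(img, threshold=5.0):
    """Flag pixels far brighter than their neighbours and replace them.

    threshold is in units of a robust noise estimate; 5 is an arbitrary choice.
    Returns (cleaned_image, hit_mask).
    """
    img = img.astype(float)
    local = neighbor_median(img)
    resid = img - local
    # median absolute deviation, scaled to approximate a Gaussian sigma
    mad = np.median(np.abs(resid - np.median(resid)))
    sigma = 1.4826 * mad if mad > 0 else 1.0
    hits = resid > threshold * sigma
    return np.where(hits, local, img), hits

rng = np.random.default_rng(1)
frame = rng.normal(100.0, 3.0, size=(20, 20))
trail = (np.arange(5, 10), np.arange(5, 10))   # a short diagonal "cosmic ray" track
frame[trail] += 500.0
cleaned, hits = remove_cosmic_rays(frame)
print(hits.sum(), cleaned[trail].max())
```

Using the neighbour median rather than the mean keeps a thin trail from dragging up its own replacement value.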

In blue, I've highlighted pixel bleed. We're going for a long exposure of a pretty faint galaxy here, so any bright stars in the field will become oversaturated. In essence, the CCD cell containing the image of the bright star begins to overflow with energetic electrons, pouring them out into adjacent cells.
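The post doesn't say how bleed is dealt with; one simple approach, assumed here, is to mask the saturated cells plus a few cells on either side along the bleed direction so they're left out of any measurement. A minimal Python/NumPy sketch follows. It assumes axis 0 is the direction the charge spills along and that `full_well` is the saturation level in the image's own units; the 65,535 used below is just the top of a 16-bit readout, chosen for illustration.

```python
import numpy as np

def bleed_mask(image, full_well, grow=2):
    """Flag saturated pixels plus `grow` pixels on either side of them along axis 0.

    Assumes axis 0 is the direction charge bleeds along and that full_well is the
    saturation level in the same units as the image (both are stand-in values).
    """
    saturated = image >= full_well
    mask = saturated.copy()
    for shift in range(1, grow + 1):
        mask[shift:, :] |= saturated[:-shift, :]   # cells after a saturated one
        mask[:-shift, :] |= saturated[shift:, :]   # cells before a saturated one
    return mask

img = np.zeros((7, 3))
img[3, 1] = 65_535          # one saturated cell in the middle column
print(bleed_mask(img, full_well=65_535).astype(int))
```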

In green, I've highlighted a row of bad pixels. With millions of cells across the entire chip, statistically many are eventually going to fail. For Earth-based observatories, it's untenable to keep throwing out CCDs that cost many thousands of dollars whenever some pixels go bad...so you work around it. For spacecraft, meanwhile, there's really nothing you can do about bad pixels even if you had the money to replace the chip.
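Working around bad pixels usually means knowing where they are and filling them in from their surroundings. Below is a minimal Python/NumPy sketch that replaces each flagged pixel with the mean of its good up/down/left/right neighbours. The boolean mask `bad` is assumed to be known already (for example from earlier calibration frames), and simple neighbour averaging is just one possible choice.

```python
import numpy as np

def fix_bad_pixels(image, bad):
    """Replace known-bad pixels with the mean of their good up/down/left/right neighbours.

    `bad` is a boolean mask assumed to come from earlier calibration. Pixels with no
    good neighbours are left unchanged.
    """
    img = image.astype(float)
    good = ~bad
    p_val = np.pad(np.where(good, img, 0.0), 1)
    p_good = np.pad(good.astype(float), 1)
    rows, cols = img.shape
    total = np.zeros((rows, cols))
    count = np.zeros((rows, cols))
    for dy, dx in [(0, 1), (2, 1), (1, 0), (1, 2)]:   # above, below, left, right
        total += p_val[dy:dy + rows, dx:dx + cols]
        count += p_good[dy:dy + rows, dx:dx + cols]
    fixed = img.copy()
    fill = bad & (count > 0)
    fixed[fill] = total[fill] / count[fill]
    return fixed

# A dead row (row 2) in a frame that brightens smoothly from top to bottom.
frame = np.repeat(np.arange(5.0)[:, None] * 10, 4, axis=1)
frame[2, :] = 0.0
bad = np.zeros_like(frame, dtype=bool)
bad[2, :] = True
print(fix_bad_pixels(frame, bad)[2])   # prints [20. 20. 20. 20.]
```

For a whole dead row, the left and right neighbours are also bad, so the fill comes entirely from the rows above and below.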

There are a couple of other artifacts noticeable in the original image as well. Notice the steady dark-to-light gradient in the background. Unfortunately, not all the pixels have the same sensitivity. Send 100 photons to one cell, and you might get 50 excited electrons...send them to another cell, and you might only get 40.

You have to account for this by taking "flat fields". Essentially, you take images (ideally just before or just after your astronomical images) of a uniformly lit surface through each color filter. The idea is that the surface sends a constant number of photons to each cell, so the only signal you'll see is the variation in sensitivity across the CCD. You then divide the astronomical image by the flat field, pixel by pixel, to remove this sensitivity effect. Finding a truly flat field, though, can be a chore in itself...often the best flat field you'll get is an image of the twilight sky before the stars come out.
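The division itself is simple. Here is a minimal Python/NumPy sketch using the 50-versus-40 electron example: the flat is first normalised to a mean of 1 so the corrected image keeps its overall brightness. It assumes the flat was taken through the same filter, that no flat pixel is zero (dead pixels would be masked separately), and that any other calibration has already been done; `flat_field_correct` is a hypothetical name.

```python
import numpy as np

def flat_field_correct(science, flat):
    """Remove pixel-to-pixel sensitivity differences.

    `flat` is an exposure of a uniformly lit source through the same filter. It is
    normalised to a mean of 1 so the corrected image keeps its overall level.
    Assumes no flat pixel is zero (dead pixels should be masked separately).
    """
    flat = flat.astype(float)
    return science / (flat / flat.mean())

# Two kinds of cells: one turns 100 photons into 50 electrons, the other into 40.
sensitivity = np.array([[0.5, 0.4],
                        [0.5, 0.4]])
science = 100 * sensitivity     # a uniformly bright patch of sky
flat = 1000 * sensitivity       # twilight-sky flat through the same filter
print(flat_field_correct(science, flat))
```

After correction every cell reads the same value (45, the mean response), so the sensitivity pattern is gone while the sky's true uniformity is recovered.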

Another artifact you may notice in the original is the weird wavy pattern, particularly noticeable on the left. Ideally you want your CCD chip to be as thin a piece of silicon as possible, since this makes it more sensitive. However, particularly at longer wavelengths, light reflecting off the back surface of the CCD can interfere with the incoming light and produce thin-film interference, very similar to the wavy colored patterns you see in soap bubbles or oil on water. Hopefully, flat-fielding removes this too.
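For the curious, the idealized condition behind the fringes can be written down. Treat the silicon as a uniform layer of thickness $d$ and refractive index $n$, and ignore the phase shifts at each reflection (they only slide the pattern sideways). Light of wavelength $\lambda$ that bounces back and forth inside the layer reinforces itself when the round trip is a whole number of wavelengths,

$$2 n d = m \lambda, \qquad m = 1, 2, 3, \dots$$

and cancels when it is off by half a wavelength. Going from a bright fringe to a dark one therefore takes only a thickness change of

$$\Delta d = \frac{\lambda}{4n} \approx \frac{900\ \text{nm}}{4 \times 3.6} \approx 62.5\ \text{nm},$$

taking silicon's refractive index as roughly 3.6 in the near-infrared (an approximate value). Tiny, unavoidable variations in the chip's thickness are enough to sweep neighbouring pixels through bright and dark fringes, which is why the pattern looks wavy. Blue light is absorbed close to the surface and rarely reaches the back, which is why the effect mostly shows up at longer wavelengths.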

Finally, as for the purpose of enhancing images, all of the above steps are necessary to get good science. Otherwise, you're just measuring your signal buried in a whole lot of noise. If you're going to take an image this far, though, you might as well go one step further to make a press release photo.

This serves several purposes, not the least of which is sharing your own fascination with an astronomical object with the general public. Imagine if the Hubble Space Telescope *never* made press release photos available and was only used for hard science in the journals...public support wouldn't be nearly what it is today. Besides, it's taxpayers' dollars that fund it; the least we can do is give them some pretty pictures in return.

So, if you want to make a pretty picture, there's one more step you'll have to take - and this is a big one - because the above image was taken through an infrared filter. By definition, the human eye can't see this wavelength of light, so if we were to represent it in "true-color", the entire image should be black.

Creatively mapping the various single-filter images to RGB space, plus some tweaking of the colors, has to happen for this to be visible (and aesthetically pleasing) to human vision. This color manipulation doesn't follow the same tried-and-true formula as the processing sequence above, and is often just adjusted until it "looks good".
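Since there's no fixed formula, any code here can only show one common starting point. The Python/NumPy sketch below stretches each filtered image to the 0-1 range (percentile clipping followed by an asinh curve, which keeps faint detail visible next to bright cores) and then assigns the three images to red, green, and blue in wavelength order, longest to red. The percentiles, softening value, and filter wavelengths are all hypothetical and would be tweaked by eye.

```python
import numpy as np

def stretch(image, low_pct=1.0, high_pct=99.5, softening=0.1):
    """Scale one filtered image to 0-1 for display.

    Clips at two percentiles, then applies an asinh curve so faint structure stays
    visible next to bright cores. All three parameters are arbitrary starting
    points meant to be tweaked by eye.
    """
    lo, hi = np.percentile(image, [low_pct, high_pct])
    if hi <= lo:                       # featureless image: nothing to stretch
        return np.zeros_like(image, dtype=float)
    scaled = np.clip((image - lo) / (hi - lo), 0.0, 1.0)
    return np.arcsinh(scaled / softening) / np.arcsinh(1.0 / softening)

def map_to_rgb(images_by_wavelength):
    """Assign three filtered images to display channels by wavelength order.

    Longest wavelength -> red, middle -> green, shortest -> blue. The ordering is
    kept even when every filter is invisible to the eye (e.g. infrared).
    """
    if len(images_by_wavelength) != 3:
        raise ValueError("this sketch expects exactly three filters")
    ordered = sorted(images_by_wavelength.items(), key=lambda kv: kv[0], reverse=True)
    return np.stack([stretch(img) for _, img in ordered], axis=-1)

# Hypothetical near-infrared frames keyed by filter wavelength in nm.
rng = np.random.default_rng(2)
frames = {wl: rng.gamma(2.0, 50.0, size=(32, 32)) for wl in (800, 900, 1000)}
rgb = map_to_rgb(frames)
print(rgb.shape, rgb.min() >= 0, rgb.max() <= 1)
```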

Cool post. Thanks again for more info. I am learning more from your site every day.

I agree with Lluvia; I'm learning so much from you. What kinda irks me a bit is that, based on this information, I really can't trust the "picture" I get from my camera. Is it an industry thing to give us what we want to see instead of what's really there?

Thank you, Planetary Astronomer Mike! What a terrifically detailed answer -- once again, I learn even more than I expected to.

A couple of questions:

Does per-pixel sensitivity change over time? In other words, is there some reason not to take the baseline flat field on Earth, using some kind of specially-built uniform lamp, before launching the CCD into space? Or would the data gathered by that artificially perfect flat field just become obsolete as pixel sensitivities change over time anyway?

And as for color-mapping down into RGB space: it's interesting that you used the word "creatively" :-). Is there some reason just uniformly upshifting (or downshifting, in the case of ultraviolet) wouldn't do the trick? I'd somehow feel that's more "accurate", since it's just a straight multiplication or division, but maybe that's naive...

P. A. Mike said: "no astronomer is going to... add lolcat tags"

I have! Then again, I am an optical physicist rather than an astronomer, so all bets are off.

@Tales from the Ink:

By "can't trust the picture" you mean that your camera interpolates separate color images from a lower-resolution grid of filters?

I don't think it's the industry misleading us so much as a practical issue: if you took a picture first with a red, then a green, then a blue filter, your subject would probably have moved in the frame in the meantime.

This isn't too much of a problem for astronomy, since stuff doesn't move that quickly...except for transient events. High-res images of Jupiter can show color blurring, though, since the planet rotates so quickly (a day there is only about 10 hours).
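A quick back-of-the-envelope check shows why. The Python sketch below estimates how far a feature near the centre of Jupiter's disk moves between two filter exposures, using the 10-hour figure above and Jupiter's equatorial radius of 71,492 km. The 5-minute gap between filters is an assumed example, and the small-angle estimate is only valid near the centre of the disk.

```python
import math

JUPITER_RADIUS_KM = 71_492    # equatorial radius
ROTATION_PERIOD_H = 10.0      # round figure from the comment; the true value is about 9.9 h

def drift_km(gap_s, radius_km=JUPITER_RADIUS_KM, period_h=ROTATION_PERIOD_H):
    """Approximate shift of a feature at the centre of Jupiter's disk between exposures."""
    angle_rad = 2 * math.pi * gap_s / (period_h * 3600)   # rotation during the gap
    return radius_km * angle_rad   # small angle: shift ~ radius x angle near disk centre

print(round(drift_km(300)))   # assumed 5-minute gap between filters; prints 3743
```

A feature drifting a few thousand kilometres between the red and blue frames ends up with slightly offset colored edges once the frames are stacked.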

The other option for digital cameras is the newer Foveon sensor:

http://bit.ly/VLaQk

It's essentially three layers of light detectors stacked in a single chip. Silicon absorbs blue light closest to the surface, green light a bit deeper, and red light deepest, so the top layer records mostly blue, the middle layer mostly green, and the bottom layer mostly red. So, you can get full-resolution images in each color with a single exposure and no need for interpolation algorithms.

However, you can expect to pay an arm and quite possibly a leg for this technology.

@Karl Fogel:

It really depends on the specific CCD, but in general, pixel sensitivity changes a lot with both time and temperature. When observing from Earth-based observatories, you almost always want to take flat fields each night, and ideally throughout the course of the night as the temperature changes (if you really want to calibrate your images well).

That said, the recently removed WFPC2 instrument on Hubble is surprisingly stable. Even so, occasional flat fields are still necessary...they get them by actually pointing Hubble back at Earth. Because Hubble moves around the planet so quickly, the images are smeared out, producing a pretty uniform field.

As for color upshifting/downshifting, that's generally what's done. However, a lot of observations might be done in, say, 6 different filters including both UV and IR...mapping that to only 3 visible color channels may require some creativity.

It gets even more complicated when some of those filters are extremely narrow for the sake of detecting some property other than just color. For example, the narrow near-IR filter at the 889-nanometer methane absorption band ultimately probes vertical height in the giant planets. Having only one color dedicated to vertical height seems a little wonky, so scientists will often favor a mapping that is decidedly "false color", e.g. this image of Jupiter:

http://bit.ly/mwCN8
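Squeezing several filters into three channels can be written as a 3 x N weight matrix: each display channel is a weighted sum of the filtered images. The Python/NumPy sketch below shows the mechanics for six hypothetical filters ordered from UV to IR; the particular weights are an arbitrary illustration, and choosing them is exactly where the creativity comes in.

```python
import numpy as np

def mix_filters(stack, weights):
    """Combine N filtered images into 3 display channels with a 3 x N weight matrix.

    stack:   shape (N, rows, cols), one image per filter, ordered by wavelength.
    weights: shape (3, N); row 0 feeds red, row 1 green, row 2 blue.
    """
    stack = np.asarray(stack, dtype=float)
    weights = np.asarray(weights, dtype=float)
    if weights.shape != (3, stack.shape[0]):
        raise ValueError("weights must have shape (3, number of filters)")
    rgb = np.tensordot(weights, stack, axes=1)   # -> (3, rows, cols)
    return np.moveaxis(rgb, 0, -1)               # -> (rows, cols, 3)

# Six hypothetical filters, shortest (UV) to longest (IR) wavelength.
# Choosing these weights is the "creative" step; this split is only one option.
weights = [[0.0, 0.0, 0.0, 0.0, 0.5, 0.5],   # red   <- two longest filters
           [0.0, 0.0, 0.5, 0.5, 0.0, 0.0],   # green <- two middle filters
           [0.5, 0.5, 0.0, 0.0, 0.0, 0.0]]   # blue  <- two shortest filters
stack = np.stack([np.full((2, 2), i + 1.0) for i in range(6)])
print(mix_filters(stack, weights)[0, 0])   # prints [5.5 3.5 1.5]
```

With only three filters, a straight wavelength shift corresponds to a weight matrix with a single 1 in each row; a deliberately "false color" mapping like the methane-band example simply uses weights that break the wavelength ordering.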

@Bittersweet Sage:

In the interest of full disclosure, I suppose I've done a bit of lolcat-ing myself:

http://bit.ly/Zn0rf

I SO want a telescope!!!

Thank you for this wonderful post!
